We find Holan's claim laughable.

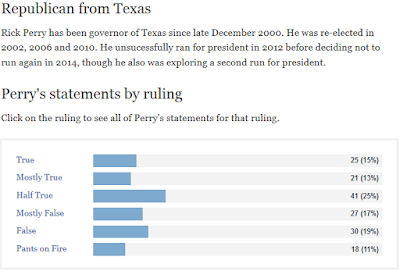

We often see PolitiFact's readers expressing their confusion on Facebook. The Donald Trump presidential candidacy, predictably accompanied by PolitiFact highlighting Trump's PolitiFact "report card," offers us yet another opportunity to survey the effects of PolitiFact's deception.

- "65% False or Pants on Fire. He's a FoxNews dream candidate"

- "admittedly, 14 is a small sample size but 0% true!"

- "Only 14 % Mostly True ??? 86 % from Half true to Pants on Fire - FALSE !!! YES - THIS IS THE KIND OF PERSON WE NEED IN THE WHITE HOUSE."

- "0% true? Now, why doesn't that surprise me?"

- "True zero percent of the time - zero. Great word, zero. Sums up this man's essence well I think"

- "I believe this is the worst record I've seen?"

- "Wow 0% true"

- "Not one single true . sounds right"

- "Profile of a habitual LIAR !"

- "Just what we need another republican that can't tell the truth !!"

- "His record is better than I expected, but still perfectly abysmal."

- "I realize that Politifact doesn't check everything politicians say, but zero percent true? That's outrageous."

- "You'd think the GOP would disqualify him for never telling the whole truth."

- "Zero percent "true"!"

- "He should do well with a record like that, lol!"

- "Not a great record in the accuracy department."

- "Never speaks the truth!!!!"

- "This looks like the record of a pathological liar."

People who believe these percentages are the fools. It's a well known thing called "selection bias". It refers to the FACT they have not checked every statement he has made just a very few. The percentages given are from those their [sic] selected. It's a well known way to lie about someone and is commonly used by left wing media and is meant to inflame their base to believe everything they read that agrees with their own beliefs.

"There's not a lot of reader confusion out there." Right. Sure.