PolitiFact has egg on its face worthy of the Great Elephant Bird of Madagascar.

On May 23, PolitiFact published an embarrassingly shallow and flawed fact check of two related claims from a viral Facebook post. The first claim held that Mitt Romney was wrong to say President Obama has presided over an acceleration of government spending unprecedented in recent history. The second claim, quoted from Rex Nutting of MarketWatch, held that "Government spending under Obama, including his signature stimulus bill, is rising at a 1.4 percent annualized pace — slower than at any time in nearly 60 years."

PolitiFact issued a "Mostly True" rating to these claims, saying its own calculations confirmed select portions of Nutting's math.

The Associated Press and Glenn Kessler of the Washington Post, among others, gave Nutting's calculations very unfavorable reviews.

PolitiFact responded with an article titled "Lots of heat and some light," quoting some of the criticisms without comment other than to insist that they did not justify any change in the original "Mostly True" rating.

PolitiFact claimed its rating was defensible since it only incorporated part of Nutting's article.

(O)ur item was not actually a fact-check of Nutting's entire column. Instead, we rated two elements of the Facebook post together -- one statement drawn from Nutting’s column, and the quote from Romney.

I noted at that point that we could look forward to the day when PolitiFact would have to reveal its confusion in future treatments of the claim.

We didn't have to wait too long.

On May 31, last Thursday, PolitiFact gave us an addendum to its original story. It's an embarrassment.

PolitiFact gives some background for the criticisms it received over its rating. There's plenty to criticize there, but let's focus on the central issue: Was PolitiFact's "Mostly True" ruling defensible? Does this defense succeed?

The biggest reason this CYA fails

PolitiFact keeps excusing its rating by claiming it focuses on the Facebook post by "Groobiecat", rather than Nutting's article, and only fact checks the one line from Nutting included in the Facebook graphic.

Here's the line again:

Government spending under Obama, including his signature stimulus bill, is rising at a 1.4 percent annualized pace — slower than at any time in nearly 60 years.

This claim figured prominently in the AP and Washington Post fact checks mentioned above. The rating for the other half of the Facebook post (on Romney's claim) relies on this one.

PolitiFact tries to tell us, in essence, that Nutting was right on this point despite other flaws in his argument (such as the erroneous 1.4 percent figure embedded right in the middle), at least sufficiently to show that Romney was wrong.

A fact check of the Facebook graphic should have looked at Obama's spending from the time he took office until Romney spoke. CBO projections should have nothing to do with it. The fact check should attempt to pin down the term "recent history" without arbitrarily deciding its meaning.

The two claims should have received their own fact checks without combining them into a confused and misleading whole. In any case, PolitiFact flubbed the fact check as well as the follow-up.

Spanners in the works

As noted above, PolitiFact simply ignores most of the criticisms Nutting received. Let's follow along with the excuses.

PolitiFact:

Using and slightly tweaking Nutting’s methodology, we recalculated spending increases under each president back to Dwight Eisenhower and produced tables ranking the presidents from highest spenders to lowest spenders. By contrast, both the Fact Checker and the AP zeroed in on one narrower (and admittedly crucial) data point -- how to divide the responsibility between George W. Bush and Obama for the spending that occurred in fiscal year 2009, when spending rose fastest.

Stay on the lookout for specifics about the "tweaking."

I'm still wondering why PolitiFact ignored the poor foundation for the 1.4 percent average annual increase figure the graphic quotes from Nutting. But no matter. Even if we let PolitiFact ignore it in favor of "slower than at any time in nearly 60 years" the explanation for their rating is doomed.

PolitiFact:

(C)ombining the fiscal 2009 costs for programs that are either clearly or arguably Obama’s -- the stimulus, the CHIP expansion, the incremental increase in appropriations over Bush’s level and TARP -- produces a shift from Bush to Obama of between $307 billion and $456 billion, based on the most reasonable estimates we’ve seen critics offer.

The fiscal year 2009 spending figure from the Office of Management and Budget was $3,517,677,000,000. That means that $307 billion (there's a tweak!) is 8.7 percent of the 2009 total spending. It also means that, before Obama gets blamed for any later spending, he had already increased FY 2009 spending over the 2008 baseline by more than President Bush did. I still can't tell where PolitiFact puts that spending on Obama's account.
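The percentage is easy to check. Here is a minimal Python sketch that uses only the figures quoted above. The variable and function names are mine, and the $456 billion line simply applies the same division to the higher end of PolitiFact's range.

```python
# FY 2009 total spending as quoted above from OMB, in billions of dollars.
FY2009_TOTAL = 3517.677

# Low and high ends of PolitiFact's range for spending shifted from Bush to Obama.
SHIFT_LOW = 307.0
SHIFT_HIGH = 456.0


def share_of_total(shift, total=FY2009_TOTAL):
    """Return the shifted amount as a percentage of total spending."""
    return 100.0 * shift / total


low_share = share_of_total(SHIFT_LOW)
high_share = share_of_total(SHIFT_HIGH)

print(f"$307 billion is {low_share:.1f} percent of FY 2009 spending")
print(f"$456 billion is {high_share:.1f} percent of FY 2009 spending")
```

The first line of output reproduces the 8.7 percent figure. The higher estimate comes out to just under 13 percent of the FY 2009 total.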

(B)y our calculations, it would only raise Obama’s average annual spending increase from 1.4 percent to somewhere between 3.4 percent and 4.9 percent. That would place Obama either second from the bottom or third from the bottom out of the 10 presidents we rated, rather than last.

PolitiFact appears to say its calculations suggest that accepting the critics' points makes little difference. We'll see that isn't the case while also discovering a key criticism of the "annual spending increase" metric.

Reviewing PolitiFact's calculations from earlier in its original story, we see that PolitiFact averages Obama's spending using fiscal years 2010 through 2013. However, in this update PolitiFact apparently does not consider another key criticism of Nutting's method: He cherry picked future projections. Subtract $307 billion from the FY 2009 spending and the increase in FY 2010 ends up at 7.98 percent. And where then do we credit the $307 billion?

Nutting:

If we attribute that $140 billion in stimulus to Obama and not to Bush, we find that spending under Obama grew by about $200 billion over four years, amounting to a 1.4% annualized increase.

Nutting does not say where he credits that spending. Neither does PolitiFact:

(C)ombining the fiscal 2009 costs for programs that are either clearly or arguably Obama’s -- the stimulus, the CHIP expansion, the incremental increase in appropriations over Bush’s level and TARP -- produces a shift from Bush to Obama of between $307 billion and $456 billion, based on the most reasonable estimates we’ve seen critics offer.

That’s quite a bit larger than Nutting’s $140 billion, but by our calculations, it would only raise Obama’s average annual spending increase from 1.4 percent to somewhere between 3.4 percent and 4.9 percent.

But where does the spending go once it is shifted? Obama's 2010? It makes a difference.

"Lies, damned lies, and statistics": PolitiFact, Nutting and the improper metric


The graphic embedded to the right helps illustrate the distortion one can create using the average increase in spending as a key statistic. Nutting probably sought this type of distortion deliberately, and it's shameful for PolitiFact to overlook it.

Using an annual average for spending allows one to make much higher spending not look so bad. Have a look at the graphic to the right just to see what it's about, then come back and pick up the reading. We'll wait.

Boost spending 80 percent in your first year (A) and keep it steady thereafter and you'll average 20 percent over four years. Alternatively, boost spending 80 percent just in your final year (B) and you'll also average 20 percent per year. But in the first case you'll have spent far more money--$2,400 more over the course of four years.

It's very easy to obscure the amount of money spent by using a four-year average. In case A spending increased by a total of $3,200 over the baseline total. That's almost $800 more than the total derived from simply increasing spending 20 percent each year (C).

Note that in the chart each scenario features the same initial baseline (green bar), the same yearly average increase (red star), and widely differing total spending over the baseline (blue triangle).
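For readers who want to check the arithmetic, here is a rough Python sketch of the three scenarios. The $1,000 baseline is my assumption, inferred from the dollar totals above (an 80 percent increase held for four years yields $3,200 only on a $1,000 base), and the names in the code are illustrative.

```python
# Assumed yearly baseline in dollars, inferred from the $3,200 and $2,400 totals.
BASELINE = 1000.0

# Year-over-year percentage increases for each four-year scenario.
SCENARIOS = {
    "A": [80.0, 0.0, 0.0, 0.0],    # big jump in the first year, then flat
    "B": [0.0, 0.0, 0.0, 80.0],    # flat, then a big jump in the final year
    "C": [20.0, 20.0, 20.0, 20.0], # steady 20 percent growth every year
}


def summarize(pct_increases, baseline=BASELINE):
    """Return (average annual percent increase, total dollars spent over baseline)."""
    level = baseline
    total_over_baseline = 0.0
    for pct in pct_increases:
        level *= 1 + pct / 100.0              # this year's spending level
        total_over_baseline += level - baseline
    average_pct = sum(pct_increases) / len(pct_increases)
    return average_pct, total_over_baseline


results = {name: summarize(pcts) for name, pcts in SCENARIOS.items()}

for name, (average_pct, over) in results.items():
    print(f"Scenario {name}: average increase {average_pct:.0f}%, "
          f"total over baseline ${over:,.2f}")
```

All three scenarios report the same 20 percent average increase, yet the totals over baseline are $3,200 for A, $800 for B, and about $2,441.60 for C. That puts A $2,400 ahead of B and roughly $758 ahead of C, which is the "almost $800" mentioned above.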

Some of Nutting's conservative critics used combined spending over four-year periods to help refute his point. Given the potential distortion from using the average annual increase, it's very easy to understand why. Comparing four-year totals smooths out the misleading effects highlighted in the graphic.

We have no evidence that PolitiFact noted any of this potential for distorting the picture. The average percentage increase should work just fine, and it's simply coincidence that identical total increases in spending look considerably lower when the largest increase happens at the beginning (example A) than when it happens at the end (example B).

Shenanigan review:

- Yearly average change metric masks early increases in spending

- No mention of the effects of TARP negative spending

- Improperly considers Obama's spending using future projections

- Future projections were cherry-picked

The shift of FY 2009 spending from TARP, the stimulus and other initiatives may also belong on the above list, depending on where PolitiFact put the spending.

I have yet to finish my own evaluation of the spending comparisons, but what I have completed so far makes it appear that Romney may well be right about Obama accelerating spending faster than any president in recent history (at least back through Reagan). Looking just at percentages on a year-by-year basis instead of averaging them shows Obama's first two years allow him to challenge Reagan or George W. Bush as the biggest accelerator of federal spending in recent history. And that's using PolitiFact's $307 billion figure instead of the higher $456 billion one.

So much for PolitiFact helping us find the truth in politics.

Note:

I have a spreadsheet on which I am performing calculations to help clarify the issues surrounding federal spending and the Nutting/PolitiFact interpretations. I hope to produce an explanatory graphic or two in the near future based on the eventual numbers. Don't expect all the embedded comments on the sheet to make sense until I finalize it (taking down the "work in progress" portion of the title).

Jeff adds:

It's not often PolitiFact admits to the subjective nature of their system, but here we have a clear case of editorial judgement influencing the outcome of the "fact" check:

Our extensive consultations with budget analysts since our item was published convinces us that there’s no single "correct" way to divvy up fiscal 2009 spending, only a variety of plausible calculations.

This tells us that PolitiFact arbitrarily chose the "plausible calculation" that was very favorable to Obama in its original version of the story. Had it used other equally plausible methods, the rating would have gone down. By presenting this interpretation of the calculations as objective fact, PolitiFact misleads its readers into believing the debate is settled.

This update also contradicts PolitiFact's reasons for the "Mostly True" rating:

So the second portion of the Facebook claim -- that Obama’s spending has risen "slower than at any time in nearly 60 years" -- strikes us as Half True. Meanwhile, we would’ve given a True rating to the Facebook claim that Romney is wrong to say that spending under Obama has "accelerated at a pace without precedent in recent history." Even using the higher of the alternative measurements, at seven presidents had a higher average annual increases in spending. That balances out to our final rating of Mostly True.

In the update, they're telling readers a portion of the Facebook post is Half-True, while the other portion is True, which balances out to the final Mostly True rating. But that's not what they said in the first rating (bold emphasis added):

The only significant shortcoming of the graphic is that it fails to note that some of the restraint in spending was fueled by demands from congressional Republicans. On balance, we rate the claim Mostly True.

In the first rating, it's knocked down because it doesn't give enough credit to the GOP for restraining Obama. In the updated version of the "facts", it's knocked down because of a "balance" between two portions that are Half-True and completely True. There's no mention of how the GOP's efforts affected the rating in the update.

Their attempts to distance themselves from Nutting's widely debunked article are also comically dishonest:

The Facebook post does rely partly on Nutting’s work, and our item addresses that, but we did not simply give our seal of approval to everything Nutting wrote.

That's what PolitiFact is saying now. But in the original article PolitiFact was much more approving:

The math simultaneously backs up Nutting’s calculations and demolishes Romney’s contention.

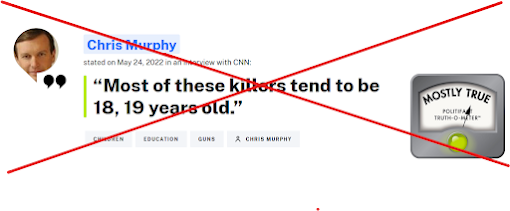

And finally, we still have no explanation for the grossly misleading headline graphic, first pointed out by Andrew Stiles:

Neither Nutting nor the original Groobiecat post claims Obama had the "lowest spending record". Both focused on the growth rate of spending. This spending record claim is PolitiFact's invention, one the fact check does not address. But it sure looks nice right next to the "Mostly True" graphic, doesn't it?

Sorting out the truth, indeed.

The bottom line is that PolitiFact's CYA is hopelessly flawed, and offensive to anyone who is sincerely concerned with the truth. A fact checker's job is to illuminate the facts. PolitiFact's efforts here only obfuscate them.

Bryan adds:

Great points by Jeff across the board. The original fact check was indefensible and the other fact checks of Nutting by the mainstream media probably did not go far enough in calling Nutting onto the carpet. PolitiFact's attempts to glamorize this pig are deeply shameful.

Update: Added background color to embedded chart to improve visibility with enlarged view.

Correction 6/4/2012: Corrected one instance in which PolitiFact's $307 billion figure was incorrectly given as $317 billion. Also changed the wording in a couple of spots to eliminate redundancy and improve clarity, respectively.