When PolitiFact unpublished its Sept. 1, 2017 fact check of a claim attacking Alabama Republican Roy Moore, that was our red flag to look into the story. Taking down a published story itself runs against the current of journalistic ethics, so we decided to keep an eye on things to see what else might come of it.

We were rewarded with a smorgasbord of questionable actions by PolitiFact.

Publication and Unpublication

PolitiFact's Sept. 1, 2017 fact check found it "Mostly False" that Republican Roy Moore had taken $1 million from a charity he ran to supplement his pay as Chief Justice of the Supreme Court of Alabama.

We have yet to read the original fact check, but we know the summary thanks to PolitiFact's Twitter confession issued later on Sept. 1, 2017:

We tweeted criticism of PolitiFact for not making an archived version of the fact check immediately available and for not providing an explanation for those who went looking for the story only to find a 404 "page not found" error. We think readers should not have to rely on Twitter to learn what is going on with the PolitiFact website.

John Kruzel takes tens of thousands of dollars from PolitiFact

(a brief lesson in misleading communications)

The way editors word a story's title, or even a subheading like the one above, makes a difference.

What business does John Kruzel have "taking" tens of thousands of dollars from PolitiFact? The answer is easy: Kruzel is an employee of PolitiFact, and PolitiFact pays Kruzel for his work. But we can make that perfectly ordinary and non-controversial relationship look suspicious with a subheading like the one above.

We have a parallel in the fact check of Roy Moore. Moore worked for the charity he ran and was paid for it. Note the title PolitiFact chose for its fact check:

Did Alabama Senate candidate Roy Moore take $1 million from a charity he ran?

"Mostly True." Hmmm.

Kruzel wrote the fact check we're discussing. He did not necessarily compose the title.

We think it's a bad idea for fact-checkers to engage in the same misleading modes of communication they ought to criticize and hold to account.

Semi-transparent Transparency

For an organization that advocates transparency, PolitiFact sure relishes its semi-transparency. On Sept. 5, 2017, PolitiFact published an explanation of its correction but rationed specifics (bold emphasis added in the second instance):

Correction: When we originally reported this fact-check on Sept. 1, we were unable to determine how the Senate Leadership Fund arrived at its figure of "over $1 million," and the group didn’t respond to our query. The evidence seemed to show a total of under $1 million for salary and other benefits. After publication, a spokesman for the group provided additional evidence showing Moore received compensation as a consultant and through an amended filing, bringing the total to more than $1 million. We have corrected our report, and we have changed the rating from Mostly False to Mostly True.

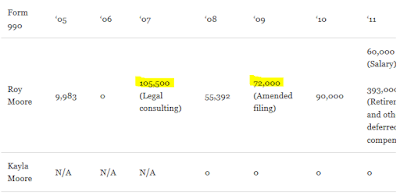

PolitiFact included a table in its fact check showing relevant information gleaned from tax documents. Two of the entries were marked as for consulting and as an amended filing, which we highlighted for our readers:

Combining the two totals gives us $177,500. Subtracting that figure from the total PolitiFact used in its corrected fact check, we end up with $853,375.

The Senate Leadership Fund PAC (Republican) was off by a measly 14.7 percent and got a "Mostly False" in PolitiFact's original fact check? PolitiFact often barely blinks over much larger errors than that.
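For readers who want to check the arithmetic, here is a short Python sketch. It assumes the 14.7 percent figure measures the shortfall against the ad's claimed $1 million (rather than against the $853,375 total); the variable names are ours.

```python
# Figures from PolitiFact's table, as described above.
consulting_and_amended = 177_500  # the two highlighted entries, combined
total_without_them = 853_375      # corrected total minus those two entries
claimed = 1_000_000               # the ad's "over $1 million"

# Adding the two entries back recovers the total PolitiFact used after its correction.
corrected_total = total_without_them + consulting_and_amended

# Shortfall of the remaining evidence, measured against the claimed figure (assumption).
error_pct = (claimed - total_without_them) / claimed * 100

print(f"Corrected total: ${corrected_total:,}")
print(f"Error relative to $1 million: {error_pct:.1f}%")
```

Measured against $853,375 instead, the gap would look larger, so the choice of baseline matters when comparing ratings.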

Take a claim by Rep. Brad Schneider (D-Ill.) from April 2017, for example. The fact check was published under the "PolitiFact Illinois" banner, but PolitiFact veterans Louis Jacobson and Angie Drobnic Holan did the writing and editing, respectively.

Schneider said the solar industry accounts for three times as many jobs as the entire coal mining industry. PolitiFact said the best data gave solar a 2.3-to-1 job advantage over coal, terming 2.3 "just short of three-to-one" and rating Schneider's claim "Mostly True."

Even if we generously round 2.5 up to 3, Schneider's claim was off by over 7 percent.
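A quick Python sketch of that comparison. It assumes the shortfall is measured against 2.5, the lowest ratio that rounds to 3; the variable names are ours.

```python
claimed_ratio = 3.0         # Schneider's "three times" the jobs
best_data_ratio = 2.3       # PolitiFact's best-data solar-to-coal ratio
lowest_rounding_to_3 = 2.5  # generous assumption: anything from 2.5 up rounds to 3

# Shortfall with no rounding at all
unrounded_pct = (claimed_ratio - best_data_ratio) / claimed_ratio * 100

# Shortfall even after crediting the rounding
rounded_pct = (lowest_rounding_to_3 - best_data_ratio) / lowest_rounding_to_3 * 100

print(f"Shortfall vs. 3:1: {unrounded_pct:.1f}%")
print(f"Shortfall vs. 2.5:1: {rounded_pct:.1f}%")
```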

How could an error of under 15 percent have dropped the rating for the Senate Leadership Fund's claim all the way down to "Mostly False"?

We examine that issue next.

Compound Claim, Or Not?

PolitiFact recognizes in its statement of principles that sometimes claims have more than one part:

We sometimes rate compound statements that contain two or more factual assertions. In these cases, we rate the overall accuracy after looking at the individual pieces.

We note that if PolitiFact does not weight the individual pieces equally, we have yet another area where subjective judgment might color "Truth-O-Meter" ratings.

Perhaps this case qualifies as one of those subjectively skewed cases.

The ad attacking Moore looks like a clear compound claim. As PolitiFact puts it (bold emphasis added):

In addition to his compensation as a judge, "Roy Moore and his wife (paid themselves) over $1 million from a charity they ran."

PolitiFact found the first part of the claim flatly false (bold emphasis added):

He began to draw a salary from the foundation in 2005, two years after his dismissal from the bench, according to the foundation’s IRS filings. So the suggestion he drew the two salaries concurrently is wrong.

Without the damning double dipping, the attack ad is a classic deluxe nothingburger with nothingfries and a super-sized nothingsoda.

Moore was ousted as Chief Justice of the Alabama Supreme Court, where he could have expected a raise up to $196,183 per year by 2008. After that ouster, Moore was paid a little over $1 million over a nine-year period, counting his wife's salary for one year, for an average well under $150,000 per year. On what planet is that not a pay cut? With the facts exposed, the attack ad loses all coherence.
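A minimal Python sketch of the pay-cut arithmetic. It assumes the "little over $1 million" is the $1,030,875 implied by the figures PolitiFact used in its corrected fact check, spread evenly over nine years; the variable names are ours.

```python
total_paid = 1_030_875             # $853,375 + $177,500, per the figures above
years = 9                          # the nine-year period
chief_justice_salary_2008 = 196_183

average_per_year = total_paid / years
gap = chief_justice_salary_2008 - average_per_year

print(f"Average per year: ${average_per_year:,.0f}")
print(f"Shortfall vs. chief justice pay: ${gap:,.0f}")
```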

Where is the "more" that serves as the theme of the ad?

We think the fact checkers lost track of the point of the ad somewhere along the line. If the ad was just about what Moore was paid for running his charity while not doing a different job at the same time, it's more neutral biography than attack ad. The main point of the attack ad was Moore supplementing his generous salary with money from running a charitable (not-for-profit) organization. Without that main point, virtually nothing remains.

PolitiFact covers itself with shame by failing to see the obvious. The original "Mostly False" rating fit the ad pretty well regardless of whether the ad correctly reported the amount of money Moore was paid for working at a not-for-profit organization.

Assuming PolitiFact did not confuse itself?

If PolitiFact denies making a mistake by losing track of the point of the ad, we have another case that helps amplify the point we made with our post on Sept. 1, 2017. In that post, we noted that PolitiFact graded one of Trump's claims as "False" based on not giving Trump credit for his underlying point.

PolitiFact does not address the "underlying point" of claims in a consistent manner.

In our current example, the attack ad on Roy Moore gets PolitiFact's seal of "Mostly" approval only by ignoring its underlying point. The ad actually misled in two ways: first, by saying Moore was supplementing his income as judge with income from his charity when the two sources of income were not concurrent; and second, by reporting the charity income while downplaying the period of time over which that income was spread. Despite the dual deceit, PolitiFact graded the claim "Mostly True."

Cases like this support our argument that PolitiFact tends to base its ratings on subjective judgments. This case also highlights a systemic failure of transparency at PolitiFact.

We will update this item if PolitiFact surprises us by running a second correction.

Afters

On top of the problems we described above, PolitiFact neglected to tag its revised and republished story with the "Corrections and Updates" tag it says it uses for all corrected or updated stories.

PolitiFact has a poor record of following this part of its corrections policy.

We note, however, that after we pointed out the problem via Twitter and email, PolitiFact fixed it without a long delay.