Have we mentioned PolitiFact is biased?

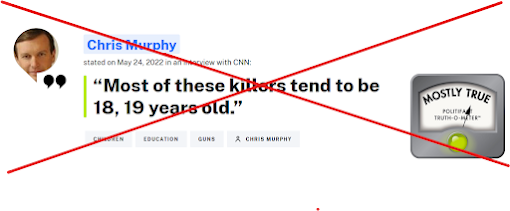

Check out this epic fail from the liberal bloggers at PolitiFact (red x added):

PolitiFact found it "Mostly True" that most of the "killers" to which Sen. Chris Murphy (D-Conn.) referred tend to be 18, 19 years old.

What's wrong with that?

Let us count the ways.

In context, Sen. Murphy was arguing that raising the age at which a person may buy a gun would reduce school shootings. Right now that threshold stands at 18 in most states and for most legal guns, with certain exceptions.

If, as Murphy says, most school shootings come from 18- and 19-year-olds, then a law moving the purchase age to 21 could potentially have quite an effect.

"Tend To Be"="Tend To Be Under"?

But PolitiFact took a curious approach to Murphy's claim. The fact checkers treated the claim as though Murphy was saying the "killers" (shooters) were 20 years old or younger.

That's not what Murphy said, but giving his claim that interpretation counts as one way liberal bloggers posing as objective journalists could do Murphy a favor.

When PolitiFact checked Murphy's stats, it found half of the shooters were 16 or under:

> When the Post analyzed these shootings, it found that more than two-thirds were committed by shooters under the age of 18. The analysis found that the median age for school shooters was 16.
>
> So, using this criteria [sic], Murphy is correct, even slightly understating the case.

See what PolitiFact did, there?

Persons 16 and under are not 18, 19 years old. Not the way Murphy needs them to be 18, 19 years old.

If Murphy can change the law to make it illegal for most shooters ("18, 19 years old") to buy a gun, that sounds like an effective measure. But persons 17 and under typically can't buy guns as things stand. So, for the true majority of shooters, Murphy's law (pun intended?) wouldn't change their ability to buy guns. Buying a gun would simply remain illegal for them, as it is now.

To emphasize, when PolitiFact found "the median age for school shooters was 16," that effectively means that most school shooters are 17 or younger. That actually contradicts Murphy's claim that most are aged 18 or 19. In fact, we should expect that most are younger than 17.

If Murphy argues for raising the gun-buying age to 21 based on most shootings coming from persons 16 or younger, that doesn't make any sense. It doesn't make sense because the change would not affect the majority of shooters: they can't buy guns now, and they couldn't under Murphy's proposed law either.

Calculated Nonsense?

By spouting mealy-mouthed nonsense, Murphy succeeded in laying out a narrative that gun control advocates would like to back. Murphy makes it seem that raising the gun-buying age to 21 might keep most school shooters from buying their guns.

As noted above, the facts don't back that claim. It's nonsense. But if a Democratic senator can get trusted media sources to back that nonsense, well then it becomes a compelling Media Narrative!

Strict Literal Interpretation

Under strict literal interpretation, Murphy's claim must count as false. If half of school shooters are 16 years old or younger, then the existence of just one 17-year-old shooter makes his claim false. Half plus one makes a majority every time.

Murphy's claim was false under strict literal (hyperliteral) interpretation.

Normal Interpretation

Normal interpretation is literal interpretation, but it takes things like "raining cats and dogs" the way people (literally) understand them normally. We've reviewed how normal interpretation should work in this case. To support a legitimate argument for a higher gun-buying age, Murphy needs to identify a population that the legislation would reasonably affect. The ages Murphy named (18, 19) meet that criterion. And, because Murphy used some language indicative of estimation ("tend to be"), we can even reasonably count 20 years of age in Murphy's set.

Expanding his set down to 17 doesn't make sense because changing the gun purchase age from 18 to 21 has no effect on a 17-year-old's ability to purchase a gun at 17.

But combining the shootings from 18-, 19- and 20-year-olds cannot make up "most" of the school shootings if the median age for the shooters is 16 and at least one shooter was either 17 or over 20.

Murphy's claim was false given normal (literal) interpretation.

Biased Interpretation

PolitiFact used biased interpretation. The fact checkers implicitly said Murphy meant most of the shootings came from people under the age of 18 or 19, even though that makes nonsense of Murphy's argument.

PolitiFact's biased interpretation enhanced a misleading media narrative attractive to liberals.

Coincidence?

Nah. PolitiFact is biased to the left. So we see them do this kind of thing over and over again.

So it's not surprising when PolitiFact rates a literally false statement from a Democrat as "Mostly True."

Correction June 1, 2022: Fixed a typo (we're=we've)