PolitiFact North Carolina's "Pants on Fire" rating, awarded to the North Carolina Republican Party on Dec. 29, 2020, likely caps the data for our study of PolitiFact's "Pants on Fire" bias. It also gives us the opportunity to show again how PolitiFact struggles to properly apply interpretive principles when looking at Republican claims.

"Democrat Governor @RoyCooperNC has not left the Governor's Mansion since the start of the #COVID19 crisis," the party tweeted on Dec. 27.

Compared to similar claims and barbs, this particular tweet stood out.

That's PolitiFact's presentation of the GOP tweet. The only elaboration occurs in the summary section ("If Your Time Is Short") and later in the story when addressing the explanation from North Carolina GOP spokesperson Tim Wigginton.

Here's that section of the story (bold emphasis added):

Party spokesman Tim Wigginton told PolitiFact NC that the tweet is not meant to be taken literally.

"The tweet is meant metaphorically," Wigginton said, adding that it’s meant to critique the frequency of Cooper’s visits with business owners. He accused Cooper of living "in a bubble … instead of meeting with people devastated by his orders."

**The NC GOP’s tweet gave no indication that the party was calling on Cooper to meet with business owners.**

The part in bold is the type of line that attracts a fact checker of fact checkers.

Was there no indication the tweet was not intended literally?

It turns out that merely clicking the link to the GOP tweet turned up something that warranted widening the investigation.

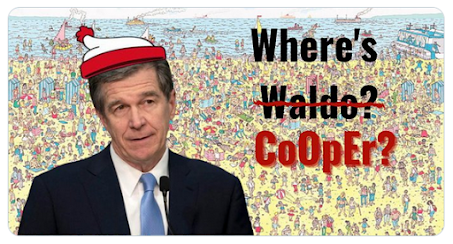

I don't see how to embed the tweet, but here's the image accompanying the tweet:

It should strike anyone, even a left-biased fact checker, that the comical "Where's Cooper?" graphic is an odd match for a literal claim that Cooper hasn't left the governor's mansion.

That alone isn't enough to take Wigginton at his word, perhaps, but as we noted, it does point toward a need for more investigation.

It turns out that the North Carolina Republican Party (NCRP) tweeted out the image repeatedly in late December, accompanied by a number of different statements.

Twitter counts as a new literary animal. Individual tweets are necessarily short on context. Twitter users may provide context in a number of ways, such as creating a thread of linked tweets or tweeting periodically on a theme. The NCRP "Where's Cooper?" series seems to qualify as the latter. The tweets are tied together contextually by the "Where's Cooper?" image, which gives the whole series a comical and mocking tone.

In short, it looks like Wigginton has support for his explanation, and the PolitiFact fact checker, Paul Specht, either didn't notice or did not think the context was important enough to share with his readers.

It's okay for PolitiFact to nitpick whether Cooper had truly refrained from leaving the governor's mansion. The GOP tweets may have left a false impression on that point. But PolitiFact just as surely left a false impression that Wigginton's explanation had no grounding in fact. Specht didn't even mention the Waldo parody image.

"Pants on Fire"?

Hyperbole. Does PolitiFact have a license for hyperbole?