Of course the title of this post is intended solely to draw attention to its content. We do not think Joy Behar lies 100 percent of the time, no matter what PolitiFact or Politico say.

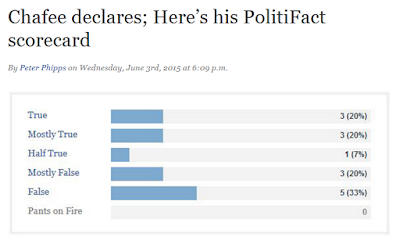

For the record, Behar's PolitiFact file as of Sept. 19, 2017:

As

we have noted over the years, many people mistakenly believe PolitiFact scorecards reasonably allow one to judge the veracity of politicians and pundits.

We posted about Behar on Sept. 7, 2017, noting that she apparently shared that mistaken view.

PolitiFact surprised us

by fact-checking Behar's statement. The fact check gave PolitiFact the opportunity to correct Behar's core misperception.

Unfortunately, PolitiFact and writer Joshua Gillin blew the opportunity.

A representative selection of statements?

Critics of PolitiFact,

including PolitiFact Bias, have for years

pointed out the obvious problems with treating PolitiFact's report cards as a means of judging general truthfulness. PolitiFact does not choose its statements in way that would ensure a representative sample, and an abundance of doubt surrounds the accuracy of the

admittedly subjective ratings.

Gillin's fact check rates Behar's conclusion about Trump's percentage of lies "False," but he succeeds in tap-dancing around each of the obvious problems.

Let Fred Astaire stand aside in awe (bold emphasis added):

It appeared that Behar was referring to Trump’s PolitiFact file, which tracks every statement we’ve rated on the Truth-O-Meter. We compile the results of a person's most interesting or provocative statements in their file to provide a broad overview of the kinds of statements they tend to make.

Focusing on a person's most interesting or provocative statements will never provide a broad overview of the kinds of statements they tend to make. Instead, that focus will provide a collection of the most interesting or provocative statements the person makes, from the point of view of the ones picking the statements. Gillin's statement is pure nonsense, like proposing that sawing segments from a two-by-four will tend to help lengthen the two-by-four. In neither case can the method allow one to reach the goal.

Gillin's nonsense fits with a pattern we see from PolitiFact. Those in charge of PolitiFact will occasionally admit to the problems the critics point out, but PolitiFact's daily presentation

obscures those same problems.

Gillin sustains the pattern as his fact check proceeds.

When is a subjective lie an objective lie?

In real life, the act of lying typically involves an intent to deceive. In PolitiFact's better moments, it

admits the difficulty of appearing to accuse people of lying. In a nutshell, it's very dicey to state as fact a person was lying unless one is able to read minds. But PolitiFact apparently cannot resist the temptation of judging lies, or at least the temptation of

appearing to make those judgments.

Gillin (bold emphasis added):

Behar said PolitiFact reported that "95 percent of what (Trump) says is a lie."

That’s a misreading of Trump’s file, which notes that of the 446 statements we’ve examined, only 5 percent earned a True rating. We’ve rated Trump’s statements False or Pants On Fire a total of 48 percent of the time.

The definitions of our Truth-O-Meter ratings make it difficult to call the bulk of Trump’s statements outright lies. The files we keep for people's statements act as a scorecard of the veracity of their most interesting claims.

Is Gillin able to read minds?

PolitiFact's fact checks, in fact, do not provide descriptions of reasoning allowing it to judge whether a person used intentionally deceptive speech.

PolitiFact's report cards tell readers only how PolitiFact rated the claims it chose to rate, and as PolitiFact's definitions do not mention the term "lie" in the sense of willful deception, PolitiFact ought to stick with calling low ratings "falsehoods" rather than "lies."

Of course Gillin fails to make the distinction clear.

We are not mind readers. However ...

Though we have warned about the difficulty of stating as fact that a person has engaged in deliberate deception, there are ways one may reasonably suggest it has occurred.

If good evidence exists that a party is aware of information contradicting that party's message and the party continues to send that same message anyway, it is reasonable to conclude that the party is (probably) lying. That is, the party likely engages in willful deception.

The judgment should not count as a matter of fact. It is the product of analysis and may be correct or incorrect.

Interviews with PolitiFact's principal figures often make clear that

judging willful deception is not part of their fact-checking process. Yet PolitiFact has a 10-year history of blurring the lines around its judgments, ranging from the "Pants on Fire" rating ("Liar, liar, pants on fire!") for "ridiculous" claims, to articles like Gillin's that skip opportunities to achieve message clarity in favor of billows of smoke.

In between the two, PolitiFact has steadfastly avoided establishing a habit of attaching appropriate disclaimers to its charts and graphs.

Why not continually remind people that the graphs only cover what PolitiFact has rated after judging it interesting or provocative?

We conclude that PolitiFact wants to imply that some politicians habitually tell intentional falsehoods while maintaining its own plausible deniability. In other words, the fact checkers want to judge people as liars under the deceptive label of nonpartisan "fact-checking" but with enough wiggle room to help shield it from criticism.

We think that is likely an intentional deception. And if it is intentional, then PolitiFact is lying.

Why would PolitiFact engage in that deception?

Perhaps it likes the influence it wields on some voters through the deception. Maybe it's just hungry for click$. We're open to other explanations that might make sense of PolitiFact's behavior.