It's that time of year again, when the supposedly non-partisan and unbiased folks at PolitiFact prepare an op-ed about the most significant lie of the year, PolitiFact's "Lie of the Year" award.

At PolitiFact Bias we have made a tradition of handicapping PolitiFact's list of candidates.

So, without further ado:

To the extent that Democrats think Trump's messaging on immigration helped Republicans in the 2018 election cycle, this candidate has considerable strength. I (Jeff can offer his own breakdown if he wishes) rate this entry as a 6 on a scale of 1-10 with 10 representing the strongest.

This claim involving Saudi Arabia qualifies as my dark horse candidate. By itself the claim had relatively little political impact. But Trump's claim relates to the murder of Saudi journalist (and U.S. resident) Jamal Khashoggi. Journalists have disproportionately gravitated toward that issue. Consonant with journalists' high estimation of their own intelligence and perception, this is the smart choice. 6.

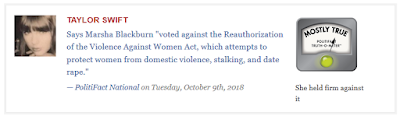

This claim has much in common with the first one. It deals with one of the key issues of the 2018 election cycle, and Democrats may view this messaging as one of the reasons the "blue wave" did not sweep over the U.S. Senate. But the first claim came from a popular ad. And the first claim was rated "Pants on Fire" while PolitiFact gave this one a mere "False" rating. So this one gets a 5 from me instead of a 6.

PolitiFact journalists may like this candidate because it undercuts Trump's narrative about the success of his economic policies. Claiming U.S. Steel is opening new plants after Trump slapped tariffs on aluminum and steel makes the tariffs sound like a big success. But not so much if there's no truth to it. How significant was it politically? Not so much. I rate this one a 4.

If this candidate carries significant political weight, it comes from the way the media narrative contradicting Trump's claim helped lead to the administration's reversal of its border policy. That reversal negated, at least to some extent, a potentially effective Democratic Party election-year talking point. I rate this one a 5.

That's five from President Trump. Are PolitiFact's candidates listed "in no particular order"? PolitiFact does not say.

Bernie Sanders' claim about background checks for firearm purchases was politically insignificant. Pickings from the Democratic Party side were slim. Democrats only had about 12 false ratings through this point in 2018, including "Pants on Fire" ratings. Republicans had over 80, for comparison. I give this claim a 1.

As with Sanders' claim, the one from Ocasio-Cortez was politically insignificant. It was ignorant, sure, but Ocasio-Cortez was guaranteed to win in her district regardless of what she said. Her statement would have been just as significant politically if she had said it to herself in a closet. This claim, like Sanders', rates as a 1.

Is this the first time a non-American made PolitiFact's list of candidates? This claim ties into the same subject as last year's winner, Russian election interference. About last year's selection I predicted "PolitiFact will hope the Mueller investigation will eventually provide enough backing to keep it from getting egg on its face." One year later it remains uncertain whether the Mueller investigation will produce a report that shows much more than the purchase of some Facebook ads. If and only if the Russia story gets new life in December will PolitiFact make this item its "Lie of the Year." I give this item a 4, with a higher ceiling depending on the late 2018 news narrative.

This claim from one of Trump's economic advisors rates about the same as Ocasio-Cortez's claim on its face. I think Kudlow may have referred to deficit projections and not deficits. But that aside, this item may appeal to PolitiFact because it strikes at the idea that tax cuts pay for themselves. Democrats imagine that Republicans commonly believe that (it may be true--I don't know). So even though this item should rate in the same range as the Sanders and Ocasio-Cortez claims I will give it a 4 to recognize its potential appeal to PolitiFact's left-leaning staff. It has a non-zero chance of winning.

Afters

A few notes: Once again, PolitiFact drew only from claims rated "False" or "Pants on Fire" to make up its list of candidates. President Obama's claim about Americans keeping their health insurance plans remains the only candidate to receive a "Half True" rating.

With five Trump statements among the 10 nominees we have to allow that PolitiFact will return to its ways of the past and make "Trump's statements as president" (or something like that) the winner.

Jeff Adds:

Knowing PolitiFact's Lie of the Year stunt is more about generating teh clickz as opposed to a function of serious journalism or truth seeking, my pick is the Putin claim.

The field of candidates is, once again, intentionally weak outside of the Putin rating. Despite all the Pants on Fire ratings it passed out to Trump this year, PolitiFact filled the list with claims that were simply False (and this is pretending there's some objective difference between any of PolitiFact's subjective ratings).

Giving the award to Bernie won't generate much buzz, so you can cross him off the list.

It's doubtful the nonpartisan liberals at PolitiFact would burden Ocasio-Cortez with such an honor when she's already taking well-deserved heat for her frequent gaffes. And as far as this pick creating web traffic, I submit that AOC isn't nearly as talked about in Democrat circles as the ire she elicits from the right would suggest. That said, she should be considered a dark horse pick.

It's not hard to imagine PolitiFacter Aaron Sharockman cooking up a scheme during a Star Chamber session to pick AOC as an attempt at outreach to conservative readers and a way to beef up PolitiFact's "we pick both sides!" street cred (a credibility, by the way, that only PolitiFact and the others in their fishbowl of liberal confirmation bias actually believe exists).

More people in America know Spencer Pratt sells healing crystals than have ever heard of Larry Kudlow. You can toss this one aside.

I let readers down and I embarrassed myself. As I repeatedly and mockingly point out to fact checkers: Confirmation bias is a helluva drug. I was convinced of the winner, and I ignored information that didn't support that outcome.

I regret that I didn't dismiss it with a coherent argument. My bad. -Jeff]

Putin is the obvious pick. Timed perfectly with the release of the Mueller report, it piggybacks onto the Russian interference buzz. Additionally, it allows ostensibly serious journos to work PolitiFact's Lie of the Year piece into their own articles about Russian involvement in the election (the catnip of liberal click-bait). It gets bonus points for confirming for PolitiFact's Democrat fan base that Trump is an illegitimate president who stole the election.

The Putin claim has everything: It's anti-Trump, it stokes Russian interference RTs and Facebook shares, and it gets links from journalists at other news outlets sympathetic to PolitiFact's cause.

The only caveat here is if PolitiFact continues its recent history of coming up with some hybrid, too-clever-by-half Lie of the Year winner that isn't actually on the list. But even if they do that, the reasoning is the same: PolitiFact is not an earnest journalism outlet engaged in fact spreading. PolitiFact exists to get your clicks and your cash.

Don't believe the hype.

Updated: Added Jeff Adds section 1306 PST 12/10/2018 - Jeff

Edit: Corrected misspelling of Ocasio-Cortez in Jeff Adds portion 2025 PST 12/10/2018 - Jeff

Updated: Strike-through text of Hogg claim analysis in Jeff Adds section, added three-paragraph mea culpa defined by brackets 2157 PST 12/12/2018 - Jeff